In medical AI, models don’t live in isolation. They are built, refined, validated, deployed, retired, and sometimes resurrected months later. The challenge isn’t just training a model—it’s keeping it alive, accountable, and useful in a clinical environment where infrastructure, regulations, and data flows can change overnight.

Too often, the lifecycle breaks down:

Data scientists remain locked in Jupyter notebooks with no clear path to production.

Model code and weights are scattered across personal machines or cloud buckets.

There is no versioning, no audit trail, and no reproducibility.

Promising models never reach the clinicians who could benefit from them.

The Gesund.ai MLOps Platform is not just a storage shelf for model artifacts. It is the operational backbone that makes models discoverable, deployable, and interoperable across sites and organizations—with hygiene, lineage, and governance baked in.

From Notebook to Production—Without Losing Anything

We support multiple pathways to get your models into the platform:

Containerized models from your own Docker registry or archives

Model zoos such as the MONAI Model Zoo or the Gesund.ai ModelHub

Externally hosted APIs for models you manage outside Gesund.ai

Cloud-hosted AutoML deployments from platforms like AWS SageMaker

Registration isn’t a blind upload—you explicitly declare:

Inputs and outputs (modalities, shapes, formats)

Model parameters and runtime configuration

Authentication and execution permissions
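Here is a minimal Python sketch of what a registration manifest might capture and how an incomplete declaration could be caught early. It is hypothetical. The class names, fields, and accepted auth schemes (`TensorSpec`, `ModelManifest`, `"api_key"`, `"oauth2"`) are assumptions, not the platform's actual schema or API.

```python
from dataclasses import dataclass, field

# Hypothetical registration manifest: names and fields are illustrative only,
# not the Gesund.ai schema.


@dataclass(frozen=True)
class TensorSpec:
    name: str
    shape: tuple        # -1 marks a variable-length dimension
    dtype: str          # e.g. "float32", "uint8"
    modality: str = ""  # e.g. "CR", "CT", "WSI"; empty for non-image outputs


@dataclass(frozen=True)
class ModelManifest:
    name: str
    version: str
    inputs: tuple                                   # TensorSpec entries
    outputs: tuple                                  # TensorSpec entries
    parameters: dict = field(default_factory=dict)  # runtime configuration
    auth: str = "api_key"                           # how callers authenticate
    allowed_roles: frozenset = frozenset()          # who may execute the model

    def validate(self) -> list:
        """Return a list of problems; an empty list means the declaration is complete."""
        problems = []
        if not self.inputs:
            problems.append("no inputs declared")
        if not self.outputs:
            problems.append("no outputs declared")
        for spec in self.inputs + self.outputs:
            if any(d == 0 or d < -1 for d in spec.shape):
                problems.append(f"{spec.name}: invalid shape {spec.shape}")
        if self.auth not in {"none", "api_key", "oauth2"}:
            problems.append(f"unsupported auth scheme {self.auth!r}")
        if not self.allowed_roles:
            problems.append("no execution permissions declared")
        return problems


manifest = ModelManifest(
    name="chest-xray-classifier",
    version="1.2.0",
    inputs=(TensorSpec("image", (1, 512, 512), "float32", modality="CR"),),
    outputs=(TensorSpec("probabilities", (14,), "float32"),),
    parameters={"threshold": 0.5},
    allowed_roles=frozenset({"radiologist"}),
)
print(manifest.validate())  # prints []
```

Making the manifest a frozen dataclass means a declaration cannot be silently mutated after registration. A new version would be a new manifest.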

From there, you can run live pre-deployment tests by sending sample medical images through the model before it becomes available anywhere on the platform. This confirms that the model is reachable and behaves as declared before it touches production workflows.
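At its core, a pre-deployment test is a loop: send each sample, then compare the response against the declaration. The sketch below assumes the model is reachable as a Python callable that returns a dict of named outputs. It uses nested lists as stand-ins for image arrays. `predeploy_check` and its helpers are hypothetical names.

```python
import time
from typing import Any, Callable, Dict, List, Sequence, Tuple

# Hypothetical pre-deployment check; not the platform's actual test harness.


def shape_of(value: Any) -> Tuple[int, ...]:
    """Infer the shape of a nested list, a stand-in for a real image or tensor."""
    shape = []
    while isinstance(value, (list, tuple)):
        shape.append(len(value))
        value = value[0] if value else None
    return tuple(shape)


def matches(shape: Tuple[int, ...], declared: Tuple[int, ...]) -> bool:
    """True if shapes agree, treating -1 in the declaration as 'any size'."""
    return len(shape) == len(declared) and all(
        d == -1 or d == s for s, d in zip(shape, declared)
    )


def predeploy_check(
    model: Callable[[Any], Dict[str, Any]],
    samples: Sequence[Any],
    declared_outputs: Dict[str, Tuple[int, ...]],
    max_seconds: float = 30.0,
) -> List[str]:
    """Send each sample through the model and collect deviations from its declaration."""
    failures = []
    for i, sample in enumerate(samples):
        start = time.monotonic()
        try:
            result = model(sample)
        except Exception as exc:  # an unreachable or crashing model fails the check
            failures.append(f"sample {i}: model raised {type(exc).__name__}: {exc}")
            continue
        elapsed = time.monotonic() - start
        # Latency is measured after the call returns: slow calls are flagged, not interrupted.
        if elapsed > max_seconds:
            failures.append(f"sample {i}: took {elapsed:.1f}s (limit {max_seconds}s)")
        missing = set(declared_outputs) - set(result)
        if missing:
            failures.append(f"sample {i}: missing outputs {sorted(missing)}")
        for name, declared in declared_outputs.items():
            if name in result and not matches(shape_of(result[name]), declared):
                failures.append(
                    f"sample {i}: {name} has shape {shape_of(result[name])}, declared {declared}"
                )
    return failures


# Usage with a toy stand-in for a model endpoint.
def toy_classifier(image):
    return {"probabilities": [0.1] * 14}


sample_images = [[[0.0] * 4 for _ in range(4)]]
print(predeploy_check(toy_classifier, sample_images, {"probabilities": (14,)}))  # prints []
```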

Infrastructure Built for Medical Inference

Running a single chest X-ray classification is very different from processing a whole-body MRI or thousands of gigapixel pathology slides.

That is why all workloads are delegated to Model Server instances—dedicated, secure inference services that handle scale, compliance, and hardware acceleration.

A Model Server can:

Use GPU acceleration when performance matters

Run on-premises inside hospital or lab firewalls

Scale horizontally across machines for high-throughput inference

Route workloads to the right hardware or location via distributed orchestration

A single Model Server instance can serve several platform instances, and each platform can register multiple Model Servers.
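The scheduling policy behind this orchestration is not described here. The sketch below assumes one plausible policy. It first filters servers by spare capacity, by GPU need, and by whether the data may leave its site. It then prefers a server at the data's own site, then the least-loaded one. `ModelServer`, `InferenceJob`, and `route` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical routing policy; not the platform's actual scheduler.


@dataclass
class ModelServer:
    name: str
    site: str          # where the server runs, e.g. a hospital data center or a cloud region
    has_gpu: bool
    active_jobs: int = 0
    max_jobs: int = 4


@dataclass
class InferenceJob:
    model: str
    needs_gpu: bool
    data_site: str
    data_may_leave_site: bool


def route(job: InferenceJob, servers: List[ModelServer]) -> Optional[ModelServer]:
    """Pick a server that satisfies hardware and data-location constraints, or None."""
    candidates = [
        s for s in servers
        if s.active_jobs < s.max_jobs                              # spare capacity
        and (s.has_gpu or not job.needs_gpu)                       # right hardware
        and (job.data_may_leave_site or s.site == job.data_site)   # data-residency rule
    ]
    if not candidates:
        return None
    # Prefer servers at the data's own site, then the least-loaded server.
    best = min(candidates, key=lambda s: (s.site != job.data_site, s.active_jobs / s.max_jobs))
    best.active_jobs += 1
    return best


servers = [
    ModelServer("site-a-gpu", site="site-a", has_gpu=True),
    ModelServer("cloud-gpu", site="cloud", has_gpu=True, active_jobs=2),
]
job = InferenceJob("wsi-segmenter", needs_gpu=True, data_site="site-a", data_may_leave_site=False)
print(route(job, servers).name)  # prints site-a-gpu
```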

Cross-Party Model Orchestration

In many cases, models and data do not live under the same roof—and sometimes, they are not even allowed to.

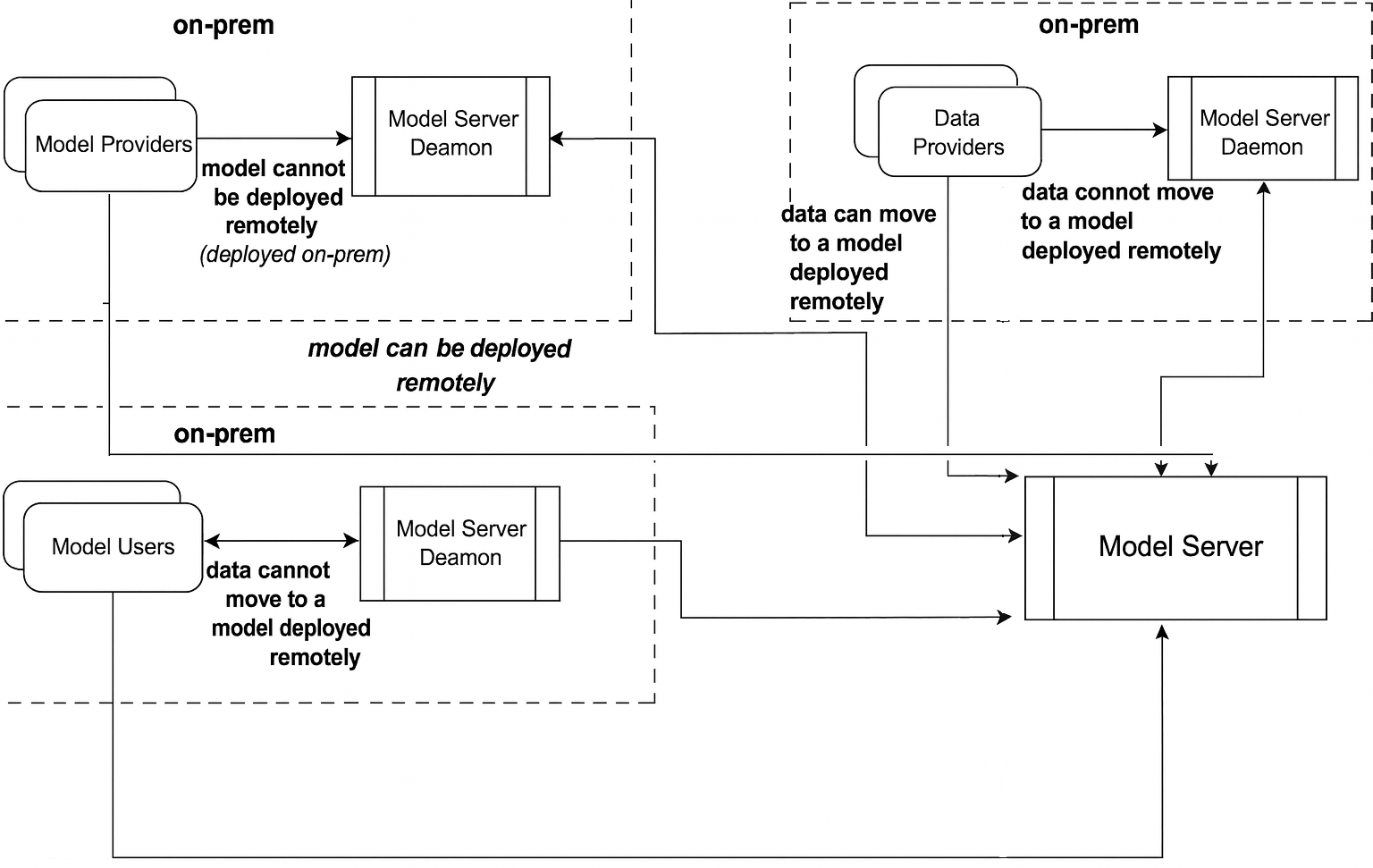

The platform’s design makes it possible for Model Providers, Data Providers, and Model Users to collaborate securely across organizational boundaries:

Model Providers register and deploy through a Model Server instance in their own secure environment. Models can remain local or be made available remotely through the central Model Server.

Data Providers decide whether their data stays local or is sent securely to remote models. If privacy rules require it, data is processed only by a daemon co-located with the data.

Model Users can run evaluations under the same constraints—local if data cannot move, remote if permitted.

This architecture balances privacy, flexibility, and interoperability, enabling registered models to be discoverable, deployable, and testable across the ecosystem—without breaking compliance.
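These placement rules amount to a small decision table. The sketch below encodes one reading of them. The function, the enum, and the choice to prefer local execution even when data may move are assumptions made for illustration. They are not the platform's documented behavior.

```python
from enum import Enum

# Hypothetical decision logic for cross-party evaluations; names are illustrative.


class ExecutionMode(Enum):
    LOCAL = "run via a daemon co-located with the data"
    REMOTE = "send the data securely to the remote model"
    BLOCKED = "no compliant execution path"


def plan_execution(
    data_may_leave: bool,
    model_available_locally: bool,
    model_available_remotely: bool,
) -> ExecutionMode:
    """Decide where an evaluation runs, given the data and model providers' policies."""
    if not data_may_leave:
        # Privacy rules pin the data in place: the model has to come to the data.
        return ExecutionMode.LOCAL if model_available_locally else ExecutionMode.BLOCKED
    # Data may move. This sketch still prefers local execution, which moves no PHI at all.
    if model_available_locally:
        return ExecutionMode.LOCAL
    return ExecutionMode.REMOTE if model_available_remotely else ExecutionMode.BLOCKED


# A hospital whose data cannot leave, evaluating a model offered only remotely:
print(plan_execution(data_may_leave=False, model_available_locally=False,
                     model_available_remotely=True).name)  # prints BLOCKED
```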

Connected Across Users, Sites, and Workflows

Once registered, models become first-class citizens of the platform and can serve several purposes across users and teams:

Pre-annotation: Link directly into an annotation project to prefill segmentations or detections, accelerating annotator workflows.

Validation: Compare predictions against ground-truth annotations and visualize the resulting performance metrics.

Cross-site sharing: Authorize other users or teams to use the model in their workflows. Data owners can run remote models on their data or test their own models against external datasets—without transferring protected health information.
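This list does not say which metrics are shown. For segmentation models, Dice and intersection-over-union (IoU) are common choices. Both follow from counting true positives, false positives, and false negatives voxel by voxel. A minimal sketch, assuming binary masks already flattened to equal-length sequences (`overlap_metrics` is a hypothetical helper):

```python
from typing import Dict, Sequence

# Hypothetical validation helper: Dice and IoU for binary segmentation masks.


def overlap_metrics(pred: Sequence[int], truth: Sequence[int]) -> Dict[str, float]:
    """Dice and IoU for two binary masks flattened to the same length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)      # predicted and annotated
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)  # predicted, not annotated
    fn = sum(1 for p, t in zip(pred, truth) if t and not p)  # annotated, but missed
    # Convention assumed here: two empty masks count as perfect agreement.
    dice = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 1.0
    return {"dice": dice, "iou": iou}


print(overlap_metrics([1, 1, 0, 0], [1, 0, 1, 0]))  # prints {'dice': 0.5, 'iou': 0.3333333333333333}
```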

Every execution, prediction, and configuration change is recorded, building model provenance and lineage so you always know which version of code, weights, and environment produced a given result.
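One common way to tie a result to the exact code, weights, and environment that produced it is to store content hashes alongside each prediction. The sketch below uses SHA-256 from Python's standard library to illustrate that idea. The record layout and helper names are hypothetical, not the platform's lineage format.

```python
import hashlib
import json
from typing import Any, Dict

# Hypothetical provenance record built from content hashes.


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def provenance_record(code: bytes, weights: bytes, environment: Dict[str, Any],
                      model_input: bytes, model_output: bytes) -> Dict[str, str]:
    """Content hashes that pin down exactly what produced a given result."""
    return {
        "code_sha256": sha256_hex(code),
        "weights_sha256": sha256_hex(weights),
        # sort_keys makes the hash independent of dict ordering
        "environment_sha256": sha256_hex(json.dumps(environment, sort_keys=True).encode()),
        "input_sha256": sha256_hex(model_input),
        "output_sha256": sha256_hex(model_output),
    }


def same_lineage(a: Dict[str, str], b: Dict[str, str]) -> bool:
    """True if two results came from identical code, weights, environment, and input."""
    keys = ("code_sha256", "weights_sha256", "environment_sha256", "input_sha256")
    return all(a[k] == b[k] for k in keys)


env = {"python": "3.11", "framework": "example-1.0", "device": "cuda"}
first = provenance_record(b"def predict(x): ...", b"\x00\x01", env, b"study-1", b"mask-v1")
rerun = provenance_record(b"def predict(x): ...", b"\x00\x01",
                          dict(reversed(env.items())), b"study-1", b"mask-v1")
print(same_lineage(first, rerun), first["output_sha256"] == rerun["output_sha256"])  # prints True True
```

If a rerun shares the same lineage but its output hash differs, something outside the recorded inputs (nondeterminism, an unpinned dependency) is influencing results. That is exactly what reproducibility checks aim to surface.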

Model Hygiene and Governance by Design

A good model in production is more than the right weights—it is a well-governed, auditable asset. The Models Module enforces:

Version control for both code and weights

Immutable audit trails for every action

Clear ownership and role-based access

Reproducibility so earlier predictions can be rerun under identical conditions

This prevents models from disappearing into untracked folders and keeps their results trustworthy years after deployment.
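Audit trails are often made tamper-evident by hash chaining. Each entry stores the hash of its predecessor, so a silent edit to history no longer verifies. The hypothetical `AuditLog` below sketches the idea. A bare chain has limits: anyone who can rewrite the whole log can also recompute every hash. Production systems therefore typically pair chaining with write-once storage or an externally anchored copy of the latest hash.

```python
import hashlib
import json
from typing import Any, Dict, List

# Hypothetical hash-chained audit log; not the platform's actual implementation.

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, Any]) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the hash of the one before it."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, actor: str, action: str, details: Dict[str, Any]) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "action": action, "details": details, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash and check that each entry links to its predecessor."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "details", "prev")}
            if entry["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("alice", "register_model", {"model": "cxr-classifier", "version": "1.2.0"})
log.append("bob", "run_inference", {"model": "cxr-classifier", "study": "S-001"})
print(log.verify())  # prints True
log.entries[0]["details"]["version"] = "1.3.0"  # a silent edit to history
print(log.verify())  # prints False
```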

Make Your Model Regulatory-Accelerator Ready

FDA’s recently announced Regulatory Accelerator encourages earlier, more interactive engagement on software devices through Early Orientation Meetings (EOMs). These are optional sessions built around live workflow demos that help reviewers understand the device under review. Treat them as orientation, not mini-clearances.

What you can bring into an EOM, directly from our Models Module:

Bring-Your-Own-Model (BYOM) with external endpoint registration and auth selection.

Pre-deploy endpoint verification using representative studies/series to confirm the model is reachable and behaves as declared.

Submission-grade traceability: model card + lineage, declared inputs/outputs, parameter controls (e.g., disabling “high-quality” mode), and immutable execution logs.

Operational readiness: background health checks, offline status detection, idle timeouts, deployment-target selection, and event logs.

Access control for reviewer-only accounts; public vs. user-scoped models.

Clinical realism for the demo: 3D CT viewer; dataset → study → series selection; label selection (e.g., lung parts, liver); queued tasks with live status; instant overlay visualization.
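Health checks, offline detection, and idle timeouts can all be derived from two timestamps per endpoint. The sketch below is hypothetical. The `EndpointHealth` class and its thresholds are assumptions for illustration: 60 seconds without a passing check means offline, and 15 minutes without requests means idle.

```python
from dataclasses import dataclass

# Hypothetical endpoint status tracker; thresholds are assumed values.


@dataclass
class EndpointHealth:
    last_successful_check: float   # timestamp (seconds) of the last passing health check
    last_request: float            # timestamp of the last inference request
    offline_after_s: float = 60.0  # assumed: no passing check for 1 minute means offline
    idle_after_s: float = 900.0    # assumed idle timeout: 15 minutes without requests

    def status(self, now: float) -> str:
        if now - self.last_successful_check > self.offline_after_s:
            return "offline"
        if now - self.last_request > self.idle_after_s:
            return "idle"  # a deployment might scale down or release its GPU here
        return "online"


h = EndpointHealth(last_successful_check=1000.0, last_request=100.0)
print(h.status(now=1030.0))  # prints idle
print(h.status(now=1100.0))  # prints offline
```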

Use the Accelerator Strategically

Anchor on workflow. Structure your EOM around clinical scenarios and hand-offs, not architecture slides.

Demo the exact build. Use version-locked environments and show how parameters affect outputs; keep logs visible.

Close with change control. Explain how post-market updates will be governed (e.g., rollback, parameters, monitoring)—the question reviewers will ask next.

Why Choose Gesund.ai

The most innovative models in healthcare often remain trapped in development environments—never making it into the clinical workflows where they could have the greatest impact. Gesund.ai changes that with a single, structured pathway from research to regulated, real-world use. Purpose-built for the unique demands of medical AI, our platform—anchored by the Models Module—operationalizes, governs, and scales models across their entire lifecycle.

Regulatory-Grade Infrastructure & Governance: Built to align with FDA and EU MDR requirements and Good Machine Learning Practice (GMLP) principles, the Models Module enforces version control for both code and weights, immutable audit trails, and role-based access. That makes every deployment inspection-ready without adding extra engineering burden.

End-to-End Lifecycle Management: From model registration and validation to deployment, monitoring, and post-market surveillance, every stage is traceable, reproducible, and auditable—closing the gap between research and clinical use.

True End-to-End Traceability: Every execution, configuration change, and prediction is logged, enabling exact result reproduction even years later—critical for regulatory defense, safety investigations, and ongoing clinical trust.

Privacy-First Architecture: Federated inference, co-located processing, and secure cross-site orchestration ensure your data never leaves compliant environments unless explicitly authorized. Models and data can interact without violating privacy regulations.

Seamless Multi-Source Registration: Whether your models originate from Docker containers, AutoML services, external APIs, or model zoos, the Models Module standardizes onboarding, enforces input/output compatibility, and verifies readiness with live pre-deployment testing.

Infrastructure-Agnostic Scalability: Dedicated Model Server instances handle workloads from high-throughput GPU processing to on-premises inference behind hospital firewalls, orchestrating resources so models always run on the optimal hardware in the right location.

Interoperability at Scale: Works seamlessly across hospitals, research centers, CROs, and AI vendors. Once registered, models can be used for annotation prefill, validation against ground truth, or secure cross-site sharing—turning standalone assets into collaborative tools.

Proven in Real-World Deployments: The platform is not just theoretical—it is already powering regulated medical AI solutions in production across imaging, diagnostics, and clinical decision support.

Gesund.ai vs. Other Platforms

Most platforms optimize for procurement and operations at provider sites. The Models Module in our platform is different: it is a regulatory-grade builder platform for sponsors and clinical product teams who must govern, evidence, submit, and operate their own models.

Open hubs (e.g., Hugging Face) focus on managed inference endpoints and ease of deployment. Radiology platforms (e.g., deepc, Incepto, CARPL, Blackford, Ferrum) aggregate portfolios, orchestrate multi-vendor apps, and monitor performance for provider deployments. Our module instead supplies the sponsor-grade evidence spine that maps to FDA’s Accelerator/EOM expectations: demo-ready builds, lineage, logs, and controls.

| Category (examples) | Primary persona | Optimizes for | Typical scope | Where it stops | Our differentiator |
|---|---|---|---|---|---|
| Open model hubs (Hugging Face) | Model devs | Discovery, hosting, endpoints | General AI | Not healthcare-grade provenance/audit | Submission-ready lineage, runtime controls, live demo evidence |
| Radiology AI marketplaces (deepc, Incepto, CARPL, Blackford, Ferrum) | Provider orgs | Buying & running curated apps | Enterprise orchestration, monitoring, reporting | App-level ops; limited sponsor-side governance | BYOM + endpoint verification + model card + event logs + 3D clinical demo → EOM-ready |
| Gesund.ai Models Module | Sponsors, RA/QA, clinical builders | Govern, evidence, submit, and operate your model | Build-to-clearance-to-post-market | N/A | Version-locked environments, audit trails, change-control narrative, reviewer-safe access |

With Gesund.ai, your models are not just stored. They are operationalized, monitored, and safeguarded for real-world medical use. The Models Module ensures that your data science work doesn’t stop at training: it lives, evolves, and delivers measurable impact in clinical workflows. And with our regulatory-first, interoperability-driven design, you gain not just a platform but a long-term strategic partner in bringing AI safely and effectively into clinical reality.

Want to learn more about our platform? Book a demo!